Index Surge: Amplifying Your Insights


When Machines Learn to Think: Are We in Trouble?

Discover the hidden risks and thrilling possibilities of machines learning to think. Are we ready for the future? Find out now!

The Ethical Implications of Artificial Intelligence: What Happens When Machines Think?

The rapid development of artificial intelligence (AI) has ignited a robust debate about its ethical implications. As machines become capable of tasks that were once exclusively human, we must consider the consequences of delegating decision-making power to algorithms. One of the primary concerns is the erosion of accountability; in scenarios where AI systems make critical choices, such as in healthcare or criminal justice, it becomes challenging to determine who is responsible for errors. This potential for misjudgment raises pressing questions about transparency, bias, and the very essence of ethical responsibility in a world where machines think.

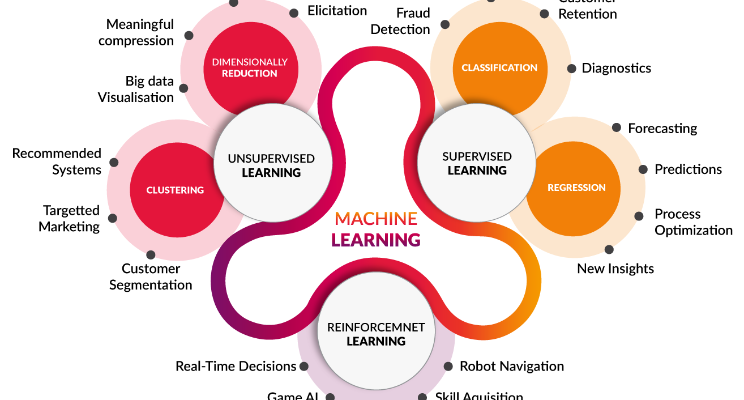

Moreover, the advent of AI challenges traditional notions of morality and autonomy. With advanced machine learning capabilities, AI can analyze massive datasets to identify patterns and make predictions, yet it lacks the human grasp of context and nuance. As we increasingly rely on AI to assist or even replace human judgment, we must grapple with the ramifications of creating systems that can operate independently. What happens when those systems absorb and reproduce particular values or biases? The potential for machines to influence society creates an urgent need for guidelines that govern their development and deployment.

Can Machines Truly Think? Exploring the Limits of AI Intelligence

The question "Can machines truly think?" has been a subject of fascination and debate for decades. As artificial intelligence (AI) continues to evolve, so does the complexity of its capabilities. Machines today can process vast amounts of data, recognize patterns, and even engage in conversations that mimic human interaction. However, AI largely relies on algorithms and patterns learned from data, raising the question of whether these actions genuinely amount to independent thought. While we can create systems that simulate cognitive functions, the essence of true thinking may remain elusive because it entails not just processing information but also understanding context and emotions and making judgments based on experience.

Moreover, there are philosophical implications tied to the concept of machine thought. For instance, the famed Turing Test evaluates a machine's ability to exhibit intelligent behavior indistinguishable from that of a human. However, passing this test does not necessarily imply consciousness or genuine understanding. Exploring the limits of AI reveals that while machines can replicate certain human-like tasks, they lack subjective experiences, emotions, and the nuanced understanding of the world that characterizes human cognition. As AI advances, it becomes crucial to distinguish between real intelligence and mere simulation, prompting us to rethink what it means to truly think.

Are We Prepared for a Future Where Machines Make Decisions for Us?

As artificial intelligence continues to evolve at a rapid pace, the question arises: are we prepared for a future where machines make decisions for us? This shift carries many implications, from ethical dilemmas to the potential loss of autonomy. Traditionally, humans have made the important decisions themselves. However, with our increasing reliance on algorithms, we must critically assess the impact of automated decision-making on our lives. Are we ready to trust machines to make choices that affect our health, finances, and even societal norms?

To answer that question, it is essential to consider several factors:

- Transparency in how these machines operate and make decisions.

- The importance of accountability when a machine's decision leads to negative consequences.

- The need for ethical frameworks to guide the development of such technologies.