Index Surge: Amplifying Your Insights


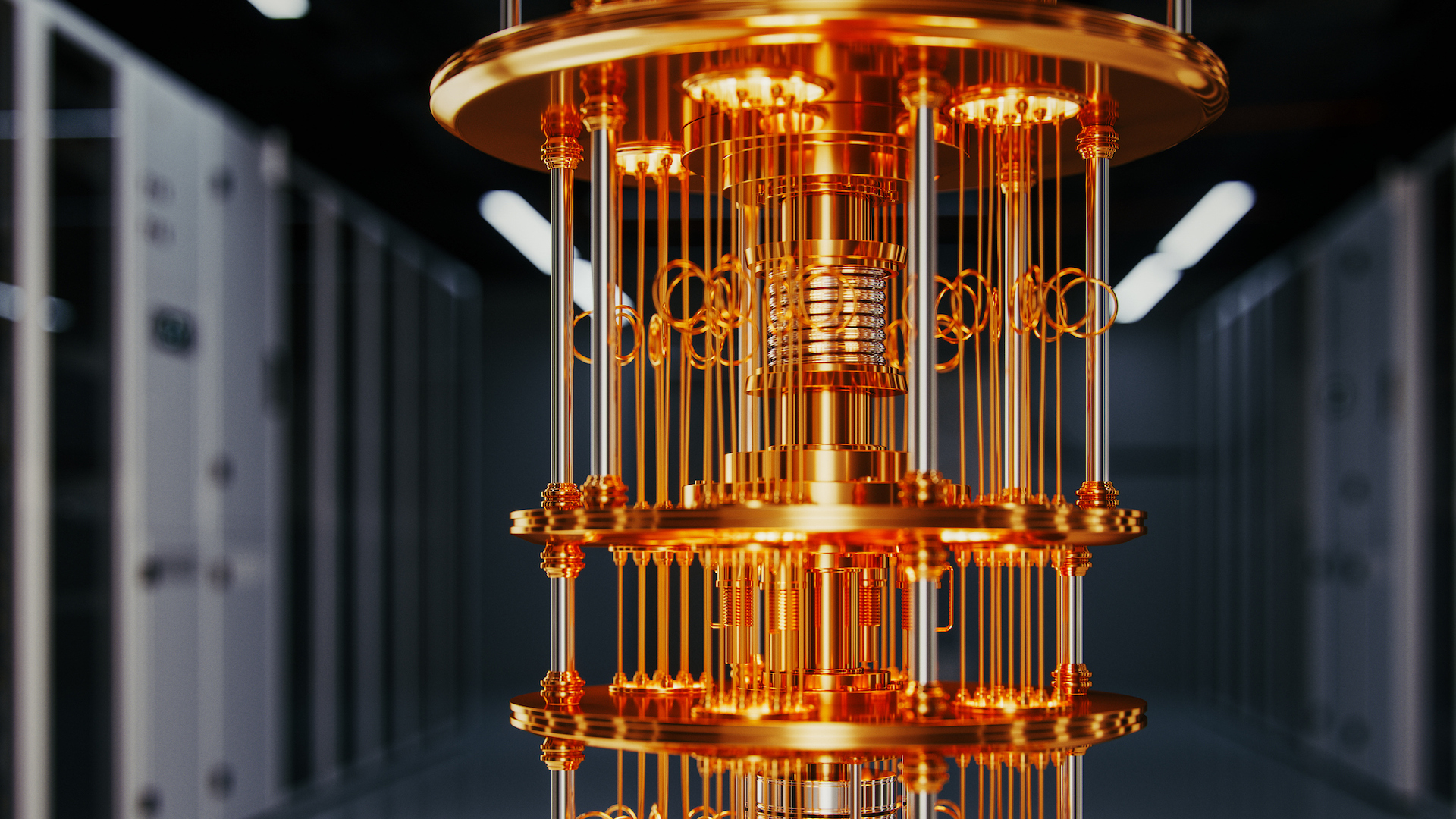

Quantum Computing: The Future or Just a Quantum Fad?

Is quantum computing the game-changer we’ve been waiting for or just another tech trend? Explore the future of this fascinating field!

Understanding Quantum Computing: How It Works and Its Potential Impact

Understanding quantum computing starts with the principles of quantum mechanics, which govern the behavior of matter and energy at the smallest scales. Classical computers store data in bits, each of which is either 0 or 1. Quantum computers use quantum bits, or qubits, which can be placed in a superposition of 0 and 1. Several qubits can also be entangled, so that their measurement outcomes are correlated in ways no classical system can reproduce. Quantum algorithms combine these effects with interference between amplitudes to solve certain problems far faster than the best known classical methods. This makes them promising for tasks in cryptography, materials science, and, potentially, optimization.
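These ideas can be made concrete with a small classical simulation. This is a minimal Python sketch, not a quantum SDK: it assumes a single qubit stored as two complex amplitudes, and the function names are hypothetical. Applying a Hadamard gate to |0> gives an equal superposition, a measurement still yields a single bit, and applying the gate a second time shows interference returning the qubit to |0>.

```python
import math
import random

# Hypothetical helpers for illustration; this is a classical simulation only.
# A qubit is a pair of complex amplitudes (alpha, beta) for |0> and |1>,
# normalized so that |alpha|^2 + |beta|^2 = 1.

def hadamard(q: tuple[complex, complex]) -> tuple[complex, complex]:
    """Apply the Hadamard gate: |0> becomes an equal superposition of |0> and |1>."""
    a, b = q
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

def probabilities(q: tuple[complex, complex]) -> tuple[float, float]:
    """Born rule: each outcome's probability is its amplitude's squared magnitude."""
    a, b = q
    return (abs(a) ** 2, abs(b) ** 2)

def measure(q: tuple[complex, complex], rng: random.Random) -> int:
    """Sample one classical bit: a measurement returns 0 or 1, never both."""
    p0, _ = probabilities(q)
    return 0 if rng.random() < p0 else 1

zero = (1 + 0j, 0j)
plus = hadamard(zero)          # superposition: about 50% |0>, 50% |1>
back = hadamard(plus)          # interference: the |1> amplitudes cancel out

rng = random.Random(0)
counts = [0, 0]
for _ in range(1000):
    counts[measure(plus, rng)] += 1

print(probabilities(plus))     # approximately (0.5, 0.5)
print(probabilities(back))     # approximately (1.0, 0.0)
print(counts)                  # roughly 500 zeros and 500 ones
```

The second Hadamard is the interesting part: the superposition is not just "both values at once" but a set of amplitudes that can cancel, which is what quantum algorithms exploit.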

The potential impact of quantum computing is broad. Pharmaceutical companies could accelerate drug discovery through more accurate molecular simulations, while financial institutions might apply quantum algorithms to risk analysis and investment strategies. Quantum computing also threatens current encryption: a large, error-corrected quantum computer running Shor's algorithm could efficiently factor large integers and compute discrete logarithms, breaking widely used public-key schemes such as RSA and elliptic-curve cryptography. This is driving a shift toward quantum-resistant (post-quantum) algorithms to safeguard sensitive information. As research advances, quantum technologies may redefine the boundaries of what is possible in computing.
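The encryption threat comes down to a specific reduction. RSA's security rests on the difficulty of factoring large integers, and Shor's algorithm reduces factoring N to finding the period r of f(x) = a^x mod N; only that period-finding step needs a quantum computer. The sketch below is an illustrative classical version with hypothetical function names: it replaces the quantum step with a brute-force search (feasible only for tiny N) and then applies the classical post-processing that turns the period into factors.

```python
import math
from typing import Optional, Tuple

def find_period(a: int, n: int) -> int:
    """Return the smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    Brute force stands in for Shor's quantum period-finding step. The loop can
    take up to n steps, exponential in the bit length of n, so it suits tiny n only.
    """
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_via_period(a: int, n: int) -> Optional[Tuple[int, int]]:
    """Classical post-processing from Shor's algorithm (hypothetical helper name)."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g              # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None                   # odd period: retry with a different a
    x = pow(a, r // 2, n)
    if x == n - 1:
        return None                   # a**(r/2) is -1 mod n: retry with a different a
    return math.gcd(x - 1, n), math.gcd(x + 1, n)

print(find_period(7, 15))             # 4, since 7**4 = 2401 = 160*15 + 1
print(factor_via_period(7, 15))       # (3, 5)
```

For a 2048-bit RSA modulus the brute-force loop is hopeless, which is exactly the gap a fault-tolerant quantum computer would close.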

Is Quantum Computing Just a Trend? Debunking Myths and Realities

The rise of quantum computing has sparked debate about whether it represents a genuine technological advance or merely a passing trend. Proponents argue that the unique capabilities of quantum processors, rooted in superposition and entanglement, will enable breakthroughs in fields like cryptography, drug discovery, and complex-system modeling. Quantum computers are often said to process vast amounts of information simultaneously; more precisely, the state of n qubits is described by 2^n amplitudes, but measuring it yields only n classical bits, so a useful algorithm must use interference to make the right answer likely. For certain problems, this lets quantum computers tackle cases currently deemed intractable. However, skepticism persists, with critics arguing that many claims about quantum computing are exaggerated or rest on unrealistic timelines.
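To make the "vast amounts of information" point concrete, here is a minimal classical simulation of two entangled qubits. The function names are hypothetical and no quantum SDK is assumed. It prepares a Bell state with a Hadamard gate followed by a CNOT, then samples measurements: the state carries four amplitudes, yet each measurement returns just two bits, and those bits always agree.

```python
import math
import random
from collections import Counter

# Hypothetical helpers; classical state-vector simulation only.
# Two-qubit state as 4 amplitudes ordered |00>, |01>, |10>, |11>,
# where the left bit is qubit 0 and the right bit is qubit 1.
BASIS = ["00", "01", "10", "11"]

def hadamard_on_qubit0(state: list[float]) -> list[float]:
    """Apply a Hadamard gate to qubit 0, leaving qubit 1 untouched."""
    s = 1 / math.sqrt(2)
    out = list(state)
    for q1 in (0, 1):
        i0, i1 = q1, 2 + q1  # indices where qubit 0 is 0 and 1, respectively
        out[i0] = s * (state[i0] + state[i1])
        out[i1] = s * (state[i0] - state[i1])
    return out

def cnot_0_to_1(state: list[float]) -> list[float]:
    """Flip qubit 1 whenever qubit 0 is 1, i.e. swap the |10> and |11> amplitudes."""
    out = list(state)
    out[2], out[3] = state[3], state[2]
    return out

def measure(state: list[float], rng: random.Random) -> str:
    """Sample one outcome with probability |amplitude|^2 (the Born rule)."""
    weights = [abs(a) ** 2 for a in state]
    return rng.choices(BASIS, weights=weights)[0]

bell = cnot_0_to_1(hadamard_on_qubit0([1.0, 0.0, 0.0, 0.0]))
rng = random.Random(42)
counts = Counter(measure(bell, rng) for _ in range(1000))

print(bell)    # about 0.707 on |00> and |11>, zero on |01> and |10>
print(counts)  # only "00" and "11" appear, roughly 500 times each
```

The correlation is the entanglement; the fact that each run returns only two bits is why "processing everything at once" does not translate directly into reading out everything at once.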

To evaluate the future of quantum computing, it is essential to distinguish its potential from the current state of the technology. Although early-stage startups and established tech giants alike are investing heavily in the field, moving from theory to practical applications remains challenging. Myths about quantum computing often stem from misunderstandings of its capabilities and limitations. For example, the idea that quantum computers will outright replace classical computers is misleading; instead, they are expected to complement existing technology by tackling specific types of problems more efficiently. As research progresses and more tangible use cases emerge, it will become clearer whether quantum computing is a lasting innovation or a fleeting trend.
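Grover's search is a standard example of a quantum algorithm that helps with one specific kind of problem: finding a marked item among N unstructured entries with about sqrt(N) oracle queries instead of roughly N/2 on average classically. That quadratic speedup is real but modest, which fits the view that quantum machines complement rather than replace classical ones. The following is a classical simulation sketch with hypothetical function names; it tracks the amplitudes directly rather than running on quantum hardware.

```python
import math

# Hypothetical helpers; a classical simulation of Grover's amplitude amplification.

def grover_probabilities(n_items: int, marked: int, iterations: int) -> list[float]:
    """Return each item's measurement probability after the given Grover iterations."""
    amp = [1 / math.sqrt(n_items)] * n_items   # start in a uniform superposition
    for _ in range(iterations):
        amp[marked] = -amp[marked]             # oracle: flip the marked item's sign
        mean = sum(amp) / n_items
        amp = [2 * mean - a for a in amp]      # diffusion: reflect every amplitude about the mean
    return [a * a for a in amp]

def optimal_iterations(n_items: int) -> int:
    """Near-optimal iteration count for one marked item: floor(pi/4 * sqrt(N))."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))

for n in (4, 64, 1024):
    k = optimal_iterations(n)
    p = grover_probabilities(n, marked=3, iterations=k)[3]
    print(f"N={n}: {k} iterations, P(marked) = {p:.4f}")
```

With 1024 items, 25 iterations already make the marked item very likely, but each iteration is an oracle call on quantum hardware, so the advantage only matters when those calls are cheap relative to classical search.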

The Future of Technology: Will Quantum Computers Replace Classical Computers?

The future of technology is a topic of great speculation, and one of the most intriguing questions is whether quantum computers will replace classical computers. Classical computers rely on bits, the smallest unit of data, and operate on a binary system of 0s and 1s. Quantum computers, in contrast, use qubits, which can occupy a superposition of 0 and 1 and can be entangled with one another. For certain problems, this allows them to perform calculations far faster than any known classical method. As research progresses and quantum technologies mature, we may see a paradigm shift in computing power that could transform fields like cryptography, materials science, and artificial intelligence.
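One way to see how qubits differ from bits is to count what a classical machine must store to simulate them. This is a minimal sketch, assuming a naive state-vector simulator that keeps 2^n complex amplitudes for n qubits at 16 bytes each (two 64-bit floats); the function names are illustrative only. It measures the storage cost of classical emulation, not the speed of a quantum computer.

```python
# Hypothetical helpers; assumes 16 bytes per amplitude (complex128).

def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory a naive simulator needs to hold all 2**n_qubits amplitudes."""
    return (2 ** n_qubits) * bytes_per_amplitude

def format_bytes(n_bytes: int) -> str:
    """Render a byte count with binary (1024-based) units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(n_bytes)
    for unit in units[:-1]:
        if value < 1024:
            return f"{value:.0f} {unit}"
        value /= 1024
    return f"{value:.0f} {units[-1]}"

for n in (10, 30, 50):
    print(f"{n} qubits: {format_bytes(state_vector_bytes(n))}")
# 10 qubits: 16 KiB
# 30 qubits: 16 GiB
# 50 qubits: 16 PiB
```

Each added qubit doubles the requirement, which is one reason exact state-vector simulation becomes impractical beyond a few dozen qubits, whereas an n-bit classical register needs only n bits.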

While quantum computers offer remarkable advantages for some problems, it remains uncertain whether they will entirely replace classical systems or merely complement them. The transition will likely depend on several factors, including the development of practical algorithms and quantum error correction. In addition, classical computing infrastructure is deeply ingrained in society, making a complete replacement improbable in the near term. A more likely future is a hybrid approach in which quantum and classical computers work in tandem, each handling the tasks that suit its strengths.
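Hybrid operation already appears in variational algorithms such as the variational quantum eigensolver, where a quantum processor evaluates a parameterized circuit and a classical optimizer adjusts the parameters. Below is a toy sketch of that loop under stated assumptions: a single simulated qubit prepared by an RY(theta) rotation, the measured quantity <Z> = cos(theta), and gradients from the parameter-shift rule. All function names are hypothetical, and the "quantum" step is computed classically here.

```python
import math

# Hypothetical helpers; the quantum circuit is simulated classically.

def expectation_z(theta: float) -> float:
    """Stand-in for the quantum step: prepare RY(theta)|0> and return <Z>.

    On hardware this value would be estimated from repeated measurements;
    here it is computed exactly from the simulated amplitudes.
    """
    a0 = math.cos(theta / 2)   # amplitude of |0>
    a1 = math.sin(theta / 2)   # amplitude of |1>
    return a0 ** 2 - a1 ** 2   # equals cos(theta)

def parameter_shift_gradient(theta: float) -> float:
    """Exact gradient from two extra circuit evaluations (parameter-shift rule)."""
    shift = math.pi / 2
    return (expectation_z(theta + shift) - expectation_z(theta - shift)) / 2

def hybrid_minimize(theta: float, learning_rate: float = 0.4, steps: int = 100) -> float:
    """Classical gradient-descent loop that repeatedly queries the (simulated) circuit."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta

theta_opt = hybrid_minimize(0.1)
print(round(theta_opt, 4), round(expectation_z(theta_opt), 4))  # approximately pi and -1.0
```

The division of labor is the point: the circuit only ever reports a measured value, while parameter updates, convergence checks, and bookkeeping stay on the classical side.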